Welcome to NodeMasterX

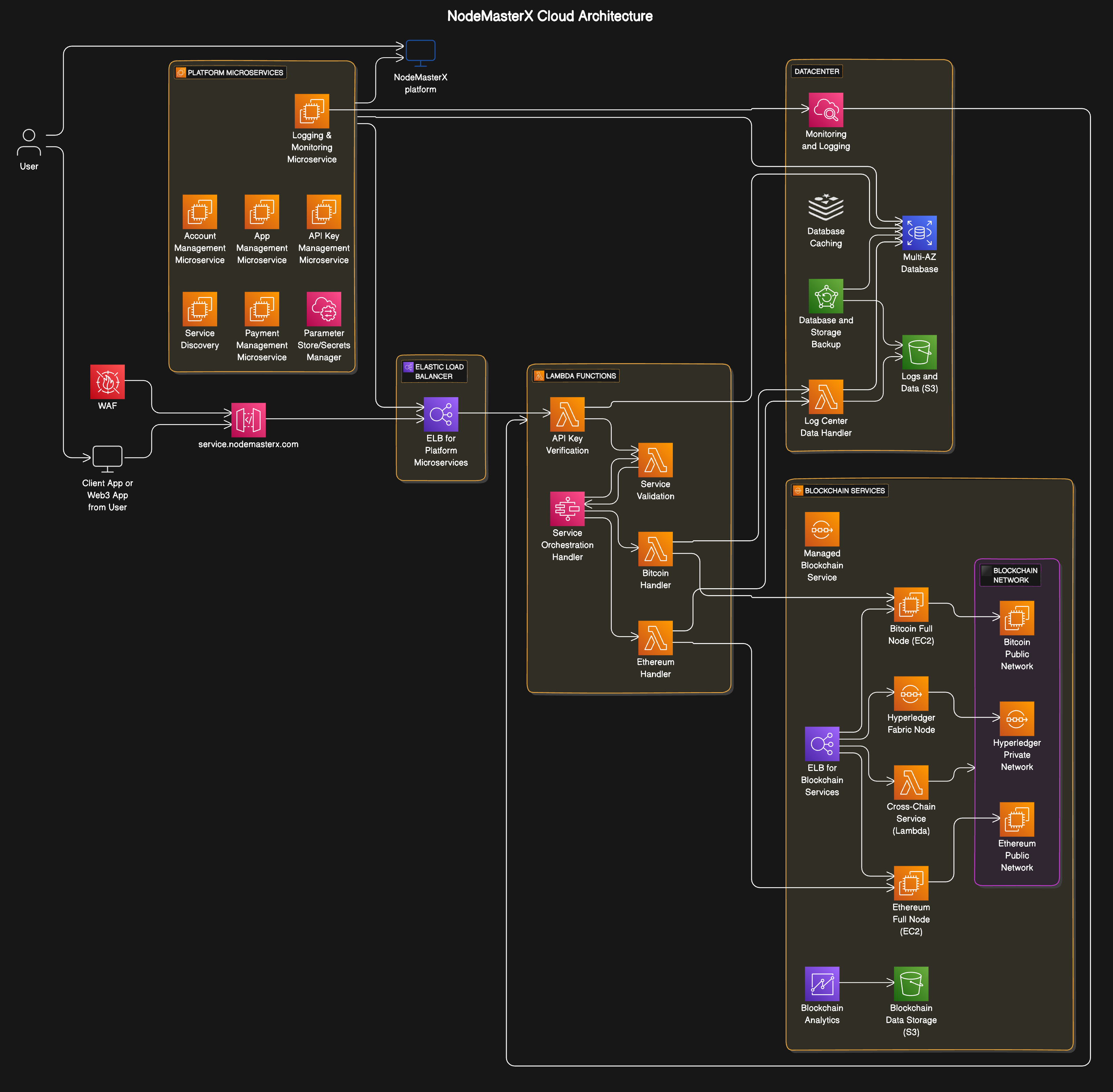

NodeMasterX: Cloud Architecture Design

Introduction

Welcome to NodeMasterX, the premier platform designed for blockchain developers and businesses to harness the power of blockchain technology with ease and efficiency. At NodeMasterX, we provide a robust, secure, and scalable environment that allows users to perform complex blockchain operations and data analysis through our advanced API services. Our platform is engineered to support a wide range of blockchain networks, enabling seamless integration for both development and financial analysis purposes.

This document serves as a comprehensive guide to understanding the NodeMasterX cloud architecture, detailing the underlying infrastructure, services, and processes that make our platform the go-to solution for blockchain development and data analysis.

The Vision Behind NodeMasterX

NodeMasterX was conceived with a clear vision: to simplify blockchain integration for developers and businesses. In the rapidly evolving world of blockchain technology, we recognize the need for a platform that not only provides access to multiple blockchain networks but also offers data analysis capabilities tailored for the financial sector.

Our mission is to empower users with the tools they need to innovate without the hassle of managing complex backend infrastructure. Whether you're a developer building a decentralized application (DApp) or a financial analyst looking to leverage blockchain data, NodeMasterX is your gateway to success.

Platform Features

1. Comprehensive Blockchain Access

NodeMasterX offers access to a variety of blockchain networks through our API services. Users can interact with networks like Bitcoin, Ethereum, and Hyperledger, enabling a wide range of use cases from Web3 development to large-scale data queries.

2. Data Analysis Capabilities

For businesses and financial analysts, NodeMasterX provides powerful tools for data analysis. Our platform supports large data queries, allowing users to extract, analyze, and visualize blockchain data in real time.

3. Secure API Management

Security is at the core of our platform. NodeMasterX employs advanced security measures, including API key verification, service validation, and error handling, ensuring that your operations are protected from unauthorized access.

4. Scalable Infrastructure

Built on AWS, NodeMasterX is designed to scale effortlessly as your needs grow. Our platform can handle increased workloads without compromising performance, making it ideal for both small projects and enterprise-level applications.

5. User-Friendly Management Portal

Our management portal, accessible at platform.nodemasterx.com, offers an intuitive interface for managing your applications, API keys, and subscriptions. Whether you're a seasoned developer or new to blockchain, our portal makes it easy to get started.

How NodeMasterX Works

1. Account Creation and Application Setup

The journey with NodeMasterX begins at our management portal. Users can quickly create an account, after which they can start setting up their applications. Each application is associated with a unique App ID and allows users to generate API keys that grant access to the desired blockchain services.

1.1 User Registration

- Step 1: Visit platform.nodemasterx.com and register for an account.

- Step 2: Verify your email and log in to the platform.

- Step 3: Set up your profile and start creating applications.

1.2 Application Creation

- Step 1: Navigate to the API service section.

- Step 2: Create a new application by providing a name and description.

- Step 3: The platform assigns a unique App ID to your application, which is stored in our secure PostgreSQL database.

1.3 API Key Generation

- Step 1: Within your application, generate an API key.

- Step 2: Each key is associated with your App ID and user ID, ensuring a secure and traceable connection to your services.

- Step 3: The API key status is active by default and can be used immediately to interact with blockchain networks.
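
The key generation steps above can be sketched as follows. This is a minimal illustration, not the platform's actual implementation: the `ApiKey` record shape, the `generate_api_key` helper, and the example IDs are all assumptions, since the real schema is not described here.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiKey:
    """Hypothetical record shape for a stored API key."""
    key: str
    app_id: str
    user_id: str
    status: str = "active"  # keys are active by default, as in Step 3


def generate_api_key(app_id: str, user_id: str) -> ApiKey:
    # token_urlsafe draws from the operating system's cryptographic RNG.
    return ApiKey(key=secrets.token_urlsafe(32), app_id=app_id, user_id=user_id)


# Example IDs are placeholders.
key = generate_api_key(app_id="app-123", user_id="user-456")
print(key.app_id, key.user_id, key.status)
```

Tying the key to both the App ID and the user ID at creation time is what makes each later request traceable to one application and one account.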

2. API Request Handling and Service Interaction

Once you have your API key, you can start making requests to service.nodemasterx.com, our API gateway. This is where the real power of NodeMasterX comes into play.

2.1 API Gateway and Request Handling

- Step 1: Send your request to service.nodemasterx.com using your API key.

- Step 2: Our AWS API Gateway receives the request and forwards it to the appropriate Lambda function for processing.

- Step 3: The Lambda function verifies the API key by querying our platform database, ensuring that only authorized requests are processed.
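
From the client side, Step 1 might look like the sketch below. The request path, the `x-api-key` header name, and the payload fields (`service_type`, `network`, `method`, `params`) are assumptions made for illustration; consult the management portal for the actual request format.

```python
import json
import urllib.request

API_URL = "https://service.nodemasterx.com/"  # path is an assumption
API_KEY = "YOUR_API_KEY"  # placeholder


def build_request(service_type: str, network: str, method: str, params: list) -> urllib.request.Request:
    # Hypothetical payload shape; the field names are not taken from the platform.
    payload = {
        "service_type": service_type,
        "network": network,
        "method": method,
        "params": params,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-api-key": API_KEY},
        method="POST",
    )


req = build_request("API_Service", "ethereum", "getBlockByNumber", ["latest"])
# urllib.request.urlopen(req) would send the request; it is not sent here.
print(req.get_method(), req.full_url)
```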

2.2 Service Validation and Execution

- Step 1: The Lambda function checks the service type specified in your request (e.g., API_Service for Web3 or Block_Data for large data queries).

- Step 2: Depending on the service type, the request is routed to the corresponding service handler (e.g., Bitcoin, Ethereum).

- Step 3: The service handler validates and processes the request, interacting with the blockchain network to perform the required operation (e.g., retrieving block information).
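
The routing described above can be sketched as a two-level lookup: first by service type, then by network. The handler functions and request fields below are hypothetical stand-ins, and the real handlers would call out to the blockchain network rather than return a fixed string.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


def handle_bitcoin(request: dict) -> dict:
    # Placeholder for a real call to a Bitcoin node.
    return {"network": "bitcoin", "result": f"handled {request['method']}"}


def handle_ethereum(request: dict) -> dict:
    # Placeholder for a real call to an Ethereum node.
    return {"network": "ethereum", "result": f"handled {request['method']}"}


# Service types come from the text; the mapping itself is an assumption.
ROUTES: Dict[str, Dict[str, Handler]] = {
    "API_Service": {"bitcoin": handle_bitcoin, "ethereum": handle_ethereum},
    "Block_Data": {"bitcoin": handle_bitcoin, "ethereum": handle_ethereum},
}


def route(request: dict) -> dict:
    handlers = ROUTES.get(request.get("service_type"))
    if handlers is None:
        raise ValueError(f"unknown service type: {request.get('service_type')!r}")
    handler = handlers.get(request.get("network"))
    if handler is None:
        raise ValueError(f"unsupported network: {request.get('network')!r}")
    return handler(request)


result = route({"service_type": "API_Service", "network": "ethereum", "method": "getBlock"})
print(result)
```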

2.3 Response Handling and Logging

- Step 1: Once the request is processed, the response is sent back to the user's client application via the Lambda function.

- Step 2: The Lambda function also logs the request and response details, including request time, response time, and any limits encountered.

- Step 3: These logs are stored in an S3 bucket and can be accessed by users through the Log Service in our management portal.
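
A log entry of the kind described above might be built like this. The field names and the S3 object key layout are assumptions for illustration; the document only states that request time, response time, and limits are recorded.

```python
import json
from datetime import datetime, timedelta, timezone


def build_log_record(app_id: str, service_type: str, request_time: datetime,
                     response_time: datetime, status_code: int,
                     limit_reached: bool = False) -> str:
    # Hypothetical record shape serialized as one JSON document per request.
    record = {
        "app_id": app_id,
        "service_type": service_type,
        "request_time": request_time.isoformat(),
        "response_time": response_time.isoformat(),
        "duration_ms": round((response_time - request_time).total_seconds() * 1000),
        "status_code": status_code,
        "limit_reached": limit_reached,
    }
    return json.dumps(record)


def log_object_key(app_id: str, request_time: datetime, request_id: str) -> str:
    # Date-based prefixes keep one application's logs grouped by day.
    return f"logs/{app_id}/{request_time:%Y/%m/%d}/{request_id}.json"


started = datetime(2024, 1, 2, 3, 4, 5, tzinfo=timezone.utc)
finished = started + timedelta(milliseconds=250)
record = build_log_record("app-123", "API_Service", started, finished, 200)
object_key = log_object_key("app-123", started, "req-1")
print(object_key)
print(record)
```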

3. Subscription and Limits

NodeMasterX operates on a subscription-based model, allowing users to select the plan that best fits their needs.

3.1 Subscription Management

- Step 1: Users can manage their subscriptions directly from the management portal.

- Step 2: Choose from various plans, each offering different API request limits and service access levels.

- Step 3: Subscriptions can be upgraded, renewed, or canceled at any time.

3.2 API Request Limits

- Step 1: Each subscription plan includes a specified limit on API requests.

- Step 2: Once the limit is reached, users can upgrade their subscription or wait for the next billing cycle.

- Step 3: Exceeding the limit without upgrading results in restricted access until the limit is reset.
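
The limit behaviour above amounts to a counter per billing cycle. The following sketch assumes a simple in-memory `Usage` object; the class, its method names, and the numbers are hypothetical, and a real system would persist the counter.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    limit: int      # requests allowed per billing cycle
    used: int = 0   # requests consumed so far in this cycle

    def try_consume(self) -> bool:
        """Count one request, or refuse it once the limit is reached."""
        if self.used >= self.limit:
            return False
        self.used += 1
        return True

    def upgrade(self, new_limit: int) -> None:
        # Upgrading raises the ceiling without discarding current usage.
        self.limit = new_limit

    def reset(self) -> None:
        # Called at the start of the next billing cycle.
        self.used = 0


usage = Usage(limit=2)
outcomes = [usage.try_consume() for _ in range(3)]
print(outcomes)
```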

4. Logging, Monitoring, and Error Handling

At NodeMasterX, transparency and reliability are paramount. Our logging and monitoring systems ensure that all interactions are recorded and can be reviewed by users at any time.

4.1 Comprehensive Logging

- Step 1: Every API request and response is logged, including detailed metadata such as request time, response time, and service type.

- Step 2: Logs are stored securely in S3 buckets and can be accessed through the Log Service in our management portal.

- Step 3: Users can review their log history to monitor API usage, identify issues, and optimize performance.

4.2 Real-Time Monitoring

- Step 1: NodeMasterX employs AWS CloudWatch for real-time monitoring of platform performance.

- Step 2: This includes monitoring API request rates, error rates, and service uptime.

- Step 3: Our monitoring systems ensure that any issues are detected and resolved quickly, minimizing downtime and ensuring a seamless user experience.

4.3 Advanced Error Handling

- Step 1: If an API key is revoked, expired, or invalid, our Lambda functions immediately return an unauthorized error.

- Step 2: Detailed error messages provide users with the information they need to resolve issues quickly.

- Step 3: All errors are logged and included in the user's log history for future reference.
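
The three failure cases in Step 1 can be told apart with a small check like the one below. The record fields (`status`, `expires_at`) and the error body are assumptions; the only detail taken from the text is that all three cases produce an unauthorized error.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def check_api_key(record: Optional[dict], now: datetime) -> Optional[dict]:
    """Return None when the key is usable, otherwise a 401 response."""
    if record is None:
        reason = "API key is invalid"
    elif record["status"] == "revoked":
        reason = "API key has been revoked"
    elif record["expires_at"] is not None and record["expires_at"] <= now:
        reason = "API key has expired"
    else:
        return None
    return {
        "statusCode": 401,
        "body": json.dumps({"error": "unauthorized", "message": reason}),
    }


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
expired = {"status": "active", "expires_at": datetime(2024, 1, 1, tzinfo=timezone.utc)}
print(check_api_key(expired, now))
```

Returning a distinct message for each case is what lets the user tell whether to generate a new key or correct a typo.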

5. Security Measures

Security is a top priority at NodeMasterX. We employ a range of security measures to protect our users' data and ensure the integrity of our platform.

5.1 API Key Security

- Step 1: API keys are securely generated and stored in our PostgreSQL database.

- Step 2: Keys are associated with specific App IDs and user IDs, ensuring that they cannot be used outside of their intended context.

- Step 3: Users can revoke API keys at any time, instantly disabling access to their services.

5.2 Data Encryption

- Step 1: All data transmitted between users and NodeMasterX is encrypted using industry-standard TLS/SSL protocols.

- Step 2: Data stored in our databases and S3 buckets is encrypted at rest, so that it remains unreadable without the encryption keys even if the underlying storage is compromised.

- Step 3: Our encryption protocols are regularly reviewed and updated to maintain the highest levels of security.

5.3 Firewall Protection

- Step 1: NodeMasterX employs a Web Application Firewall (WAF) to protect against common web-based attacks, such as SQL injection and cross-site scripting (XSS).

- Step 2: The WAF is configured to block suspicious activity and prevent unauthorized access to our platform.

- Step 3: Regular security audits ensure that our firewall rules are up-to-date and effective.

In-Depth Exploration of NodeMasterX Architecture Components

1. AWS API Gateway

1.1 Introduction to API Gateway

AWS API Gateway is a managed service that enables developers to create, publish, maintain, monitor, and secure APIs at any scale. It acts as the front door for applications to access data, business logic, or functionality from your backend services. In the NodeMasterX platform, the API Gateway plays a crucial role in managing and processing requests made by clients (users or applications) to access various blockchain services.

1.2 Core Functions of API Gateway

1.2.1 Request Handling and Routing

API Gateway serves as the entry point for all API requests to the NodeMasterX services hosted at service.nodemasterx.com. When a client makes a request, the API Gateway intercepts it, validates the request, and routes it to the appropriate backend service, typically an AWS Lambda function. This routing is based on the API Gateway's configuration, which maps specific paths and HTTP methods (GET, POST, etc.) to corresponding backend integrations.

1.2.2 Request Validation

One of the critical responsibilities of the API Gateway is to validate incoming requests. This validation can include checking the structure of the request body, the presence and format of required headers, and ensuring that required parameters are included. In NodeMasterX, this step helps ensure that only well-formed requests reach the backend services, reducing the risk of errors and ensuring that invalid requests are rejected early.
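
API Gateway performs this kind of check through request validators and JSON Schema models. The plain Python sketch below only illustrates the checks themselves; the header name and the required fields are assumptions, not the platform's actual contract.

```python
# Hypothetical contract: one required header and three required string fields.
REQUIRED_HEADERS = ("x-api-key",)
REQUIRED_FIELDS = {"service_type": str, "network": str, "method": str}


def validate_request(headers: dict, body: dict) -> list:
    """Return a list of problems; an empty list means the request is well-formed."""
    errors = []
    present = {name.lower() for name in headers}  # header names are case-insensitive
    for name in REQUIRED_HEADERS:
        if name not in present:
            errors.append(f"missing header: {name}")
    for field, expected in REQUIRED_FIELDS.items():
        if field not in body:
            errors.append(f"missing field: {field}")
        elif not isinstance(body[field], expected):
            errors.append(f"field {field} must be of type {expected.__name__}")
    return errors


problems = validate_request({"Content-Type": "application/json"}, {"service_type": "API_Service", "network": 1})
print(problems)
```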

1.2.3 Security Enforcement

API Gateway provides several layers of security for API requests. In the context of NodeMasterX, it plays a significant role in:

- Authentication and Authorization: API Gateway can integrate with AWS Identity and Access Management (IAM) or other identity providers to authenticate requests. It can also enforce authorization by ensuring that the requester has the necessary permissions to access the requested resource.

- API Key Management: NodeMasterX uses API Gateway to manage and validate API keys. Each request must include a valid API key, which the Gateway checks against its stored configuration. Invalid or missing API keys result in immediate rejection of the request.

- Rate Limiting: To protect against abuse and ensure fair usage, API Gateway allows the implementation of rate limits. For NodeMasterX, this means limiting the number of API calls a user can make in a given timeframe, based on their subscription plan.

1.2.4 Throttling and Caching

API Gateway offers built-in throttling mechanisms to control the rate at which clients can call the API. This prevents overloading the backend services and ensures that the system remains responsive even under heavy load. Additionally, API Gateway can cache responses to improve performance, reducing the need for repeated processing of identical requests.
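
API Gateway's throttling follows the token bucket algorithm: a steady refill rate plus a burst capacity. The sketch below shows the idea in isolation; the class and the numbers are illustrative and are not NodeMasterX's configured limits.

```python
class TokenBucket:
    """Allows `burst` requests at once, then `rate` requests per second."""

    def __init__(self, rate: float, burst: int, now: float = 0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # the bucket starts full
        self.updated = now

    def allow(self, now: float) -> bool:
        # Refill according to elapsed time, capped at the burst capacity.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(float(self.burst), self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # a gateway would answer 429 Too Many Requests here


bucket = TokenBucket(rate=1.0, burst=2)
decisions = [bucket.allow(t) for t in (0.0, 0.0, 0.0, 1.0, 1.0)]
print(decisions)
```

Passing the clock in as an argument keeps the example deterministic; a production limiter would read a monotonic clock instead.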

1.3 Integration with Backend Services

API Gateway seamlessly integrates with various backend services, including AWS Lambda, Amazon EC2, and others. In NodeMasterX:

- Lambda Integration: API Gateway routes requests to specific Lambda functions based on the request path and method. These Lambda functions then handle the logic for interacting with blockchain networks, querying databases, or performing other operations.

- Direct Integration with AWS Services: In some cases, API Gateway might directly interact with other AWS services (like S3 or DynamoDB) without involving a Lambda function. This is particularly useful for static content delivery or data retrieval that doesn't require custom processing.

1.4 Monitoring and Logging

API Gateway provides extensive monitoring and logging capabilities through AWS CloudWatch. This includes:

- Metrics Collection: API Gateway automatically collects and reports metrics like request count, latency, and error rates. These metrics are crucial for monitoring the health and performance of NodeMasterX APIs.

- Access Logging: Detailed access logs capture information about each request, including the source IP, user agent, request path, and response status. These logs are invaluable for debugging, security audits, and usage analysis.

1.5 Challenges and Considerations

While API Gateway offers many benefits, there are also challenges to consider:

- Latency: Because API Gateway acts as a proxy between clients and backend services, it introduces some latency. For most applications, this is negligible, but it's something to be aware of, especially in high-performance environments.

- Complexity in Configuration: API Gateway is highly configurable, which can be both an advantage and a disadvantage. The flexibility can lead to complex configurations that are challenging to manage and troubleshoot.

- Cost Considerations: API Gateway pricing is based on the number of requests processed, the amount of data transferred, and additional features like caching. For high-traffic applications, these costs can add up quickly, so careful cost management is necessary.

2. AWS Lambda

2.1 Introduction to AWS Lambda

AWS Lambda is a serverless compute service that lets you run code without provisioning or managing servers. Lambda automatically scales your application by running code in response to events, and it only charges for the compute time consumed. In NodeMasterX, AWS Lambda is used extensively for processing API requests, performing blockchain operations, and managing backend logic.

2.2 Core Functions of AWS Lambda in NodeMasterX

2.2.1 Event-Driven Architecture

Lambda functions in NodeMasterX are triggered by events, such as incoming API requests from API Gateway. This event-driven model is ideal for handling operations that occur sporadically or on-demand, as it eliminates the need to maintain idle servers, reducing costs and simplifying scalability.

2.2.2 Request Processing

When an API request reaches the Lambda function via API Gateway, the function's handler code is executed. This code can perform a variety of tasks, such as:

- Blockchain Interactions: The Lambda function may query a blockchain network, send transactions, or retrieve data blocks, depending on the API request.

- Database Operations: Lambda functions often interact with the PostgreSQL database to verify API keys, retrieve user information, log requests, or store query results.

- Service Orchestration: Lambda functions can also act as orchestrators, coordinating multiple backend services to fulfill complex requests. For example, a request to retrieve a user's transaction history might involve querying both the blockchain and the PostgreSQL database.

2.2.3 Error Handling and Logging

Lambda functions in NodeMasterX are designed with robust error handling mechanisms. If a function encounters an error (e.g., an invalid API key, a timeout while querying the blockchain, or a database connection issue), it can:

- Return Detailed Error Messages: Lambda can return custom error messages to the API Gateway, which then passes them back to the client. This ensures that users receive meaningful feedback and can take corrective action.

- Log Errors: Lambda functions automatically log errors and other relevant information to CloudWatch Logs, providing a record of what went wrong. These logs are critical for debugging and improving the system's resilience.
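
A handler with this style of error handling might look like the sketch below. The response shape (`statusCode`, `headers`, `body`) is the one used by API Gateway's Lambda proxy integration; the `process` function, the `UnauthorizedError` class, and the hard-coded key check are hypothetical placeholders for the real logic.

```python
import json
import logging

logger = logging.getLogger(__name__)  # in Lambda, log output is sent to CloudWatch Logs


class UnauthorizedError(Exception):
    """Raised when the API key cannot be verified (hypothetical)."""


def process(event: dict) -> dict:
    # Placeholder business logic; a real function would query the database.
    headers = event.get("headers") or {}
    if headers.get("x-api-key") != "valid-key":
        raise UnauthorizedError("invalid API key")
    return {"echo": json.loads(event.get("body") or "{}")}


def _response(status: int, payload: dict) -> dict:
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event: dict, context) -> dict:
    try:
        return _response(200, process(event))
    except UnauthorizedError as exc:
        logger.warning("unauthorized request: %s", exc)
        return _response(401, {"error": str(exc)})
    except json.JSONDecodeError:
        logger.warning("request body is not valid JSON")
        return _response(400, {"error": "request body must be valid JSON"})
    except Exception:
        # Log the full traceback but return a generic message to the client.
        logger.exception("unhandled error")
        return _response(500, {"error": "internal error"})


ok = lambda_handler({"headers": {"x-api-key": "valid-key"}, "body": '{"a": 1}'}, None)
print(ok["statusCode"], ok["body"])
```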

2.3 Scalability and Performance

One of Lambda's most significant advantages is its ability to scale automatically. When multiple requests arrive simultaneously, AWS Lambda can spin up additional instances of the function to handle the load. This auto-scaling ensures that NodeMasterX can maintain performance and responsiveness even during periods of high demand.

2.4 Integration with Other AWS Services

Lambda integrates seamlessly with other AWS services, making it a versatile component of the NodeMasterX architecture:

- S3 Integration: Lambda functions can be triggered by events in S3, such as the creation of new log files or the arrival of new data for processing.

- RDS Integration: Lambda functions can connect to the PostgreSQL database hosted on Amazon RDS to perform SQL queries, insert data, or update records.

- SNS and SQS Integration: Lambda can publish messages to Amazon SNS (Simple Notification Service) for notifications or send messages to SQS (Simple Queue Service) for queued processing.

2.5 Security Considerations

Lambda functions operate within a secure environment, with each function running in its isolated context. However, there are additional security practices to consider:

- IAM Roles and Policies: Lambda functions should be assigned specific IAM roles that grant only the permissions needed for their operation. This principle of least privilege minimizes the risk of unauthorized access to other AWS resources.

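- Environment Variables: Configuration values can be stored in Lambda environment variables, which are encrypted at rest. Because anyone with permission to view the function's configuration can read them, AWS recommends a dedicated secrets store such as AWS Secrets Manager for sensitive information like API keys or database credentials.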

2.6 Cost Efficiency

AWS Lambda charges based on the number of requests and the compute time consumed. This model is highly cost-efficient for NodeMasterX, as it eliminates the need to pay for idle server capacity. Functions that execute quickly and infrequently incur minimal costs, making Lambda an ideal choice for the platform's event-driven architecture.

2.7 Challenges and Considerations

Despite its many advantages, using Lambda does come with challenges:

- Cold Start Latency: The first invocation of a Lambda function can experience a cold start delay, where the function takes longer to start up because it needs to initialize a new execution environment. This latency can be mitigated by strategies like keeping functions warm (pre-warming) or optimizing the function code.

- Execution Limits: Lambda functions have execution time limits (up to 15 minutes) and memory constraints (up to 10 GB). While sufficient for most tasks, long-running or memory-intensive operations may require alternative solutions, such as running on Amazon EC2.

3. PostgreSQL Database (Amazon RDS)

3.1 Introduction to PostgreSQL and Amazon RDS

PostgreSQL is a powerful, open-source object-relational database system known for its robustness, extensibility, and compliance with SQL standards. It supports a wide range of data types, advanced querying capabilities, and strong transactional integrity. In NodeMasterX, PostgreSQL serves as the primary data store, managing everything from user information and API keys to service logs and analytics data.

Amazon RDS (Relational Database Service) provides a managed environment for running PostgreSQL, handling routine database tasks such as backups, patching, and scaling, allowing the NodeMasterX team to focus on developing the application rather than managing the database infrastructure.

3.2 Core Functions of PostgreSQL in NodeMasterX

3.2.1 User and API Key Management

The PostgreSQL database stores all critical user data, including profiles, subscription details, and API keys. Whenever a client makes an API request, the system queries PostgreSQL to verify the API key's validity, check the user's subscription status, and retrieve any other necessary information.

- Data Integrity: PostgreSQL's support for ACID (Atomicity, Consistency, Isolation, Durability) transactions ensures that all operations involving user data and API keys are reliable and consistent. For example, when a user updates their subscription, the transaction will either fully complete or fully roll back, ensuring that the database remains in a valid state.
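
The all-or-nothing behaviour can be demonstrated with a small transaction. To keep the example runnable without a database server, it uses Python's built-in `sqlite3` module as a stand-in for PostgreSQL; the `subscriptions` table and its columns are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the PostgreSQL database
conn.execute(
    "CREATE TABLE subscriptions ("
    " user_id TEXT PRIMARY KEY,"
    " plan TEXT NOT NULL,"
    " request_limit INTEGER NOT NULL CHECK (request_limit > 0))"
)
conn.execute("INSERT INTO subscriptions VALUES ('user-456', 'basic', 1000)")
conn.commit()


def change_plan(conn: sqlite3.Connection, user_id: str, plan: str, request_limit: int) -> bool:
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute("UPDATE subscriptions SET plan = ? WHERE user_id = ?", (plan, user_id))
            conn.execute(
                "UPDATE subscriptions SET request_limit = ? WHERE user_id = ?",
                (request_limit, user_id),
            )
        return True
    except sqlite3.IntegrityError:
        return False


# The second UPDATE violates the CHECK constraint, so the first is undone too.
ok = change_plan(conn, "user-456", "pro", 0)
row = conn.execute("SELECT plan, request_limit FROM subscriptions").fetchone()
print(ok, row)
```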

3.2.2 Service Logs and Analytics

NodeMasterX leverages PostgreSQL to store detailed logs of all service interactions. This includes records of API requests, responses, execution times, errors, and other relevant metadata. These logs are invaluable for:

- Troubleshooting: If a user reports an issue, developers can query the logs to trace the request path, identify any errors, and determine what went wrong.

- Analytics and Reporting: PostgreSQL's advanced querying capabilities allow the team to generate insights from the log data. For example, they can analyze usage patterns, identify popular services, and track performance over time.

3.2.3 Data Archiving and Retention

To manage the volume of data over time, NodeMasterX uses PostgreSQL for data archiving and retention. The system can automatically move older logs and records to archive tables or even export them to Amazon S3 for long-term storage. This helps maintain optimal performance in the primary database while ensuring that historical data remains accessible if needed.

3.3 Scalability and Performance

PostgreSQL on Amazon RDS offers several features that enhance scalability and performance for NodeMasterX:

- Read Replicas: To handle increased read traffic, NodeMasterX can use PostgreSQL read replicas. These replicas can serve read-only queries, offloading some of the load from the primary database and improving overall performance.

- Auto-Scaling Storage: With storage autoscaling enabled, RDS automatically increases the allocated storage of the PostgreSQL database as the data grows, up to a configured maximum threshold. This reduces the risk that NodeMasterX runs out of storage space and helps it continue operating smoothly.

- Performance Tuning: PostgreSQL offers a wide range of tuning options, including indexing, query optimization, and caching. These tools help ensure that the database performs efficiently even as the volume of data and the complexity of queries increase.

3.4 Security and Compliance

Security is a top priority for the PostgreSQL database in NodeMasterX:

- Encryption: Data at rest in PostgreSQL is encrypted using AWS-managed keys through the RDS service. Additionally, data in transit between the application and the database is encrypted using SSL, protecting it from eavesdropping and tampering.

- Access Controls: PostgreSQL supports fine-grained access controls, allowing NodeMasterX to define roles and permissions that limit access to specific tables, views, or even individual rows and columns. This ensures that only authorized users can access sensitive data.

- Audit Logging: For compliance and security auditing, PostgreSQL can be configured to log all access and modification events. These audit logs are crucial for tracking unauthorized access attempts or changes to critical data.

3.5 Backup and Recovery

Amazon RDS automates the backup and recovery process for PostgreSQL, providing several options:

- Automated Backups: RDS automatically performs daily backups of the PostgreSQL database and retains them for a user-defined retention period. These backups can be used to restore the database to a specific point in time in the event of data loss or corruption.

- Manual Snapshots: NodeMasterX can also create manual snapshots of the database at any time. These snapshots are particularly useful before performing significant updates or changes, providing a recovery point if anything goes wrong.

3.6 Challenges and Considerations

While PostgreSQL and Amazon RDS offer robust and reliable database services, there are some challenges to consider:

- Cost Management: The cost of running a PostgreSQL database on RDS can increase with the size of the database, the number of read replicas, and the use of additional features like Multi-AZ deployments. Careful cost monitoring and optimization are necessary to ensure that the service remains affordable.

- Complexity in Advanced Features: PostgreSQL offers a wide range of advanced features, but configuring and tuning them effectively requires in-depth knowledge and expertise. For teams without a dedicated database administrator, this can be challenging.

4. Amazon S3

4.1 Introduction to Amazon S3

Amazon Simple Storage Service (S3) is an object storage service that offers industry-leading scalability, data availability, security, and performance. In NodeMasterX, Amazon S3 is used for storing various types of data, including logs, backups, and static assets.

4.2 Core Functions of Amazon S3 in NodeMasterX

4.2.1 Log Storage and Management

One of the primary uses of Amazon S3 in NodeMasterX is for storing logs. Every API request, service execution, and database transaction generates logs that are stored in S3. This provides a durable and scalable solution for retaining vast amounts of log data.

- Cost-Effective Storage: S3 offers different storage classes (e.g., Standard, Infrequent Access, Glacier) that allow NodeMasterX to optimize storage costs based on how frequently the logs need to be accessed.

- Lifecycle Policies: NodeMasterX can define lifecycle policies in S3 to automatically transition older logs to cheaper storage classes or delete them after a certain period. This helps manage storage costs and ensures that the log data does not grow indefinitely.
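
A lifecycle policy of this kind could be expressed as the configuration below, which follows the structure of an S3 lifecycle configuration (the shape boto3's `put_bucket_lifecycle_configuration` accepts as `LifecycleConfiguration`). The prefix, rule ID, and day counts are assumptions chosen for illustration, not NodeMasterX's actual retention settings.

```python
import json


def log_lifecycle_rules(prefix: str = "logs/", ia_after_days: int = 30,
                        glacier_after_days: int = 90, expire_after_days: int = 365) -> dict:
    # Each stage must come strictly later than the previous one.
    if not (ia_after_days < glacier_after_days < expire_after_days):
        raise ValueError("day thresholds must be strictly increasing")
    return {
        "Rules": [
            {
                "ID": "archive-then-expire-logs",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


config = log_lifecycle_rules()
print(json.dumps(config, indent=2))
```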

4.2.2 Backup and Archiving

In addition to logs, Amazon S3 is also used for storing database backups and other critical data that needs to be archived. The durability of S3 (designed for 99.999999999% durability) ensures that this data is safe and recoverable when needed.

- Versioning: S3 supports versioning, which allows NodeMasterX to keep multiple versions of an object. This is particularly useful for backups, where each version represents a different snapshot of the database or file system.

- Cross-Region Replication: To enhance data resilience, NodeMasterX can enable cross-region replication, where data stored in one S3 region is automatically replicated to another region. This protects against regional failures and ensures data availability even in disaster scenarios.

4.2.3 Static Content Delivery

For any static content, such as documentation, images, or configuration files, NodeMasterX uses Amazon S3. This content can be served directly from S3, leveraging its integration with Amazon CloudFront for low-latency content delivery.

- Public and Private Access: S3 allows fine-grained access control, meaning that some objects (like public documentation) can be made publicly accessible, while others (like internal configuration files) are restricted to authorized users.

4.3 Scalability and Performance

Amazon S3 is highly scalable, capable of storing an unlimited amount of data. It automatically handles the infrastructure management, including scaling the storage capacity and managing the network bandwidth to meet the demands of the application.

- High Throughput: S3 supports at least 3,500 write (PUT/COPY/POST/DELETE) or 5,500 read (GET/HEAD) requests per second per prefix, making it suitable for high-traffic applications like NodeMasterX. This ensures that logs and other data are written to and retrieved from S3 quickly and efficiently.

- Optimized Data Transfer: S3 supports multi-part uploads, which allow large files to be uploaded in parallel, improving transfer speed and reliability. Similarly, large objects can be retrieved in parts, optimizing performance for large data sets.
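
Splitting an object into parts can be sketched as below. The constants reflect S3's multipart limits (every part except the last must be at least 5 MiB, and an upload may have at most 10,000 parts); the `plan_parts` helper and the default part size are assumptions. The same inclusive byte ranges can be used for ranged downloads.

```python
MIN_PART_SIZE = 5 * 1024 * 1024  # S3 minimum for every part except the last
MAX_PARTS = 10_000               # S3 maximum number of parts per upload


def plan_parts(total_size: int, part_size: int = 8 * 1024 * 1024) -> list:
    """Return (part_number, first_byte, last_byte) tuples covering the object."""
    if total_size <= 0:
        raise ValueError("total_size must be positive")
    if part_size < MIN_PART_SIZE:
        raise ValueError("part_size is below the S3 minimum")
    # Grow the part size if the object would otherwise need too many parts.
    part_size = max(part_size, -(-total_size // MAX_PARTS))
    parts = []
    offset = 0
    number = 1
    while offset < total_size:
        end = min(offset + part_size, total_size)
        parts.append((number, offset, end - 1))  # byte ranges are inclusive
        offset = end
        number += 1
    return parts


parts = plan_parts(20 * 1024 * 1024)
print(parts)
```

Each tuple can then be uploaded or fetched independently, which is what allows the transfers to run in parallel.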

4.4 Security and Compliance

Security is a critical aspect of using Amazon S3, especially for sensitive data like logs and backups:

- Encryption: Data stored in S3 can be encrypted at rest using S3-managed keys (SSE-S3), AWS KMS keys (SSE-KMS), or customer-provided keys (SSE-C). NodeMasterX can enforce encryption for all objects to protect data at rest, and can require HTTPS (TLS) for all requests to protect data in transit.

- Access Control Policies: S3 supports bucket policies and access control lists (ACLs) that allow NodeMasterX to define who can access specific buckets or objects. These policies can be as granular as necessary, limiting access based on user roles, IP addresses, or other criteria.

- Logging and Auditing: S3 provides access logs that track all requests made to the buckets. These logs are crucial for auditing and compliance, allowing NodeMasterX to monitor access and detect any unauthorized attempts.
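
As one example of a bucket policy, the sketch below builds a statement that denies any request not made over TLS, using the `aws:SecureTransport` condition key. The bucket name is a placeholder, and this is only one possible rule, not NodeMasterX's actual policy.

```python
import json


def deny_insecure_transport_policy(bucket: str) -> str:
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }
    return json.dumps(policy, indent=2)


policy_json = deny_insecure_transport_policy("example-log-bucket")  # placeholder name
print(policy_json)
```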

4.5 Cost Efficiency

Amazon S3's pricing model is based on the amount of data stored, the number of requests made, and the data transferred out of the S3 buckets. NodeMasterX can optimize these costs through:

- Storage Classes: By selecting the appropriate storage class for each use case (e.g., Infrequent Access for logs, Glacier for archives), NodeMasterX can significantly reduce storage costs.

- Lifecycle Policies: Automating data transitions between storage classes using lifecycle policies ensures that NodeMasterX is not paying for expensive storage when cheaper options are available.

4.6 Challenges and Considerations

While S3 is a powerful storage solution, it does come with its own set of challenges:

- Data Management: Managing large amounts of data across multiple buckets and regions can become complex. Implementing an effective strategy for organizing and accessing this data is crucial to avoid operational inefficiencies.

- Cost Management: Without careful management, S3 costs can quickly escalate, especially with large-scale applications that generate significant amounts of log and backup data. Regular cost monitoring and optimization are necessary to keep expenses in check.

5. Amazon CloudFront

5.1 Introduction to Amazon CloudFront

Amazon CloudFront is a content delivery network (CDN) service that securely delivers data, videos, applications, and APIs to customers globally with low latency and high transfer speeds. In NodeMasterX, CloudFront plays a critical role in ensuring fast and reliable delivery of static assets, APIs, and other content to users around the world.

5.2 Core Functions of Amazon CloudFront in NodeMasterX

5.2.1 Content Delivery Optimization

NodeMasterX uses Amazon CloudFront to deliver static content, such as images, stylesheets, and JavaScript files, as well as API responses. By caching this content at edge locations around the world, CloudFront significantly reduces latency and improves the user experience.

- Global Edge Locations: CloudFront operates a global network of edge locations, ensuring that content is served from a location geographically close to the user. This reduces the time it takes for content to reach the user and improves the overall performance of the application.

- Content Caching: By caching content at edge locations, CloudFront reduces the load on the origin servers (such as S3 or the API Gateway), as repeated requests for the same content can be served directly from the cache. This not only improves performance but also reduces the cost associated with serving content from the origin.
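
The effect of edge caching on origin load can be illustrated with a small time-to-live cache. This is a simplified model, not how CloudFront is implemented; the `EdgeCache` class, the 60-second TTL, and the fake origin are assumptions.

```python
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Serves a cached copy until it is `ttl_seconds` old, then refetches."""

    def __init__(self, fetch_from_origin: Callable[[str], str], ttl_seconds: float):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._store: Dict[str, Tuple[str, float]] = {}  # path -> (body, expiry time)
        self.origin_hits = 0

    def get(self, path: str, now: float) -> str:
        entry = self._store.get(path)
        if entry is not None and now < entry[1]:
            return entry[0]  # cache hit: the origin is not contacted
        self.origin_hits += 1
        body = self._fetch(path)
        self._store[path] = (body, now + self._ttl)
        return body


cache = EdgeCache(lambda path: f"content of {path}", ttl_seconds=60)
for t in (0, 10, 59):
    cache.get("/docs/index.html", now=t)
print(cache.origin_hits)
```

Three requests inside the TTL window reach the origin only once; a longer TTL lowers origin traffic further at the cost of serving older content.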

5.2.2 Security and DDoS Protection

Amazon CloudFront provides multiple layers of security to protect NodeMasterX from threats such as Distributed Denial of Service (DDoS) attacks:

- AWS Shield: Integrated with AWS Shield, CloudFront provides automatic protection against DDoS attacks. This ensures that NodeMasterX remains available even during high-volume attacks.

- SSL/TLS Encryption: CloudFront supports SSL/TLS encryption, ensuring that all content delivered to users is secure. NodeMasterX can configure CloudFront to require HTTPS for all requests, protecting sensitive data in transit.

- Web Application Firewall (WAF) Integration: CloudFront integrates with AWS WAF, allowing NodeMasterX to define custom security rules that block common attack patterns, such as SQL injection or cross-site scripting (XSS).

5.3 Scalability and Performance

Amazon CloudFront is designed to handle the demands of high-traffic websites and applications. It scales automatically to absorb spikes in traffic, so NodeMasterX can accommodate users during peak times without provisioning edge capacity in advance.

- Elastic Load Balancing: CloudFront can use an AWS Elastic Load Balancing (ELB) load balancer as its origin. The load balancer then distributes the requests that miss the edge cache across multiple Amazon EC2 instances, further enhancing scalability and reliability.

- Lambda@Edge: CloudFront supports running serverless functions at edge locations using Lambda@Edge. NodeMasterX can use this feature to customize content delivery based on user preferences, modify requests and responses on the fly, or even perform A/B testing without impacting the origin servers.
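The A/B testing use case can be sketched as a Lambda@Edge viewer-request function. This is an illustration only: the cookie name `X-Experiment`, the alternate page `/index-b.html`, and the 50/50 split are hypothetical choices, while the event layout (`Records[0].cf.request`, lower-cased header names mapping to lists of `key`/`value` pairs) follows the structure CloudFront passes to Lambda@Edge functions.

```python
import random

EXPERIMENT_COOKIE = "X-Experiment"   # hypothetical cookie name
VARIANT_B_PATH = "/index-b.html"     # hypothetical alternate page


def choose_variant(cookie_header: str, rng=random.random) -> str:
    """Return "A" or "B", honouring an existing cookie so users stay in one group."""
    for part in cookie_header.split(";"):
        name, _, value = part.strip().partition("=")
        if name == EXPERIMENT_COOKIE and value in ("A", "B"):
            return value
    # No valid cookie: assign a group at random (assumed 50/50 split).
    return "B" if rng() < 0.5 else "A"


def handler(event, context):
    """Viewer-request handler: rewrite the URI for users in variant B."""
    request = event["Records"][0]["cf"]["request"]
    cookies = request["headers"].get("cookie", [])
    cookie_header = "; ".join(item["value"] for item in cookies)
    if request["uri"] == "/index.html" and choose_variant(cookie_header) == "B":
        request["uri"] = VARIANT_B_PATH
    # Returning the request lets CloudFront continue processing it.
    return request
```

In this sketch a user without the cookie is assigned a group on every request. Making the assignment sticky would require a companion viewer-response function that sets the cookie, which is omitted here.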

5.4 Cost Efficiency

CloudFront's pricing is based on the amount of data transferred out of the CDN, the number of requests, and the geographic region from which the data is served. NodeMasterX can optimize these costs by:

- Optimizing Cache Behavior: By fine-tuning the cache behavior, such as setting appropriate cache expiration times, NodeMasterX can reduce the number of requests to the origin and lower the associated data transfer costs.

- Monitoring Usage: Regularly monitoring CloudFront usage and performance metrics helps NodeMasterX identify opportunities for cost savings, such as optimizing content delivery strategies or adjusting cache settings.
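The effect of cache tuning on origin load is simple arithmetic: only requests that miss the edge cache reach the origin. The sketch below makes that relationship explicit. The request volume and hit ratios in the usage note are made-up figures for illustration, not NodeMasterX measurements.

```python
def origin_requests(total_requests: int, cache_hit_ratio: float) -> int:
    """Estimate how many requests reach the origin for a given cache hit ratio."""
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    # Every request that is not served from the edge cache goes to the origin.
    return round(total_requests * (1.0 - cache_hit_ratio))
```

For example, with 1,000,000 requests, raising the hit ratio from 0.80 to 0.90 halves the estimated origin traffic from 200,000 to 100,000 requests.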

5.5 Challenges and Considerations

While Amazon CloudFront offers significant benefits, there are some challenges to consider:

- Configuration Complexity: Setting up and optimizing CloudFront distributions can be complex, especially for applications with diverse content types and varying performance requirements. Ensuring that the distribution is configured correctly requires careful planning and expertise.

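- Cache Invalidation: Managing cache invalidation is crucial for ensuring that users receive the most up-to-date content. However, invalidation requests are billed per path beyond a monthly free allowance and take time to propagate to every edge location, so frequent invalidations can become costly and may leave stale content visible in the meantime. Versioned object names are a common alternative for assets that change often.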
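One common way to sidestep invalidation for static assets is to embed a content hash in each file name, so that a changed file is published under a new URL and the old cached copy is simply never requested again. A minimal sketch follows. The function name and the eight-character digest length are illustrative choices, not part of the NodeMasterX build process.

```python
import hashlib
from pathlib import PurePosixPath


def versioned_name(path: str, content: bytes, digest_len: int = 8) -> str:
    """Insert a short SHA-256 content hash before the file extension."""
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    p = PurePosixPath(path)
    # "static/app.css" becomes "static/app.<digest>.css"
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))
```

Because the name changes only when the content changes, such assets can be cached with very long expiration times, leaving invalidation for the few objects that must keep a fixed URL.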

6. Amazon Route 53

6.1 Introduction to Amazon Route 53

Amazon Route 53 is a scalable and highly available Domain Name System (DNS) web service designed to route end users to internet applications. In NodeMasterX, Route 53 is responsible for managing domain names, routing traffic to AWS resources, and ensuring that users can reliably access the platform.

6.2 Core Functions of Amazon Route 53 in NodeMasterX

6.2.1 DNS Management

Route 53 manages the DNS records for NodeMasterX, translating human-readable domain names into IP addresses that computers use to connect to each other. This is essential for ensuring that users can access NodeMasterX services via easily recognizable domain names, such as nodemasterx.com.

- DNS Zones: Route 53 allows NodeMasterX to create hosted zones for different parts of the application, enabling fine-grained control over DNS settings. For example, separate hosted zones can be created for the main platform, API services, and documentation.

- Routing Policies: Route 53 supports various routing policies, including simple, weighted, latency-based, and failover routing. NodeMasterX can use these policies to optimize the distribution of traffic across different AWS regions or instances, improving performance and reliability.
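As an illustration of weighted routing, the sketch below builds the change batch that the Route 53 `ChangeResourceRecordSets` API expects, with one weighted A record per target. The record name, set identifiers, and IP addresses used in the usage note are placeholders from documentation ranges, not NodeMasterX endpoints.

```python
def weighted_record_changes(name: str, targets: dict[str, tuple[str, int]], ttl: int = 60) -> dict:
    """Build a Route 53 ChangeBatch with one weighted A record per target.

    `targets` maps a SetIdentifier to a pair of (IPv4 address, weight).
    """
    changes = []
    for set_id, (ip, weight) in targets.items():
        if not 0 <= weight <= 255:
            raise ValueError("Route 53 weights must be between 0 and 255")
        changes.append({
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": name,
                "Type": "A",
                "SetIdentifier": set_id,
                "Weight": weight,
                "TTL": ttl,
                "ResourceRecords": [{"Value": ip}],
            },
        })
    return {"Comment": "weighted routing sketch", "Changes": changes}


def expected_share(targets: dict[str, tuple[str, int]]) -> dict[str, float]:
    """Fraction of DNS answers each target receives: its weight over the total."""
    total = sum(weight for _, weight in targets.values())
    if total == 0:
        # When every weight is 0, traffic is spread with equal probability.
        return {set_id: 1 / len(targets) for set_id in targets}
    return {set_id: weight / total for set_id, (_, weight) in targets.items()}
```

With targets `{"us-east-1": ("192.0.2.10", 3), "eu-west-1": ("198.51.100.20", 1)}`, three quarters of the answers point to the first endpoint. The resulting batch could then be submitted with boto3's `change_resource_record_sets(HostedZoneId=..., ChangeBatch=batch)`, where the hosted zone ID is specific to the account.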

6.2.2 Health Checks and Monitoring

Route 53 provides health checks for monitoring the status of AWS resources. NodeMasterX can configure health checks to automatically route traffic away from unhealthy instances or regions, ensuring that users are directed to operational endpoints.

- Automatic Failover: In the event of a failure in one region, Route 53 can automatically fail over to another region, minimizing downtime and maintaining service availability.

- DNS Failover: DNS failover ensures that if the primary endpoint becomes unavailable, Route 53 will route users to a backup endpoint, such as a disaster recovery site or a secondary region.
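The failover behavior described above can be modelled in a few lines. This is a simplified simulation of the decision logic, not Route 53's implementation: the endpoint names are placeholders, the failure threshold of three consecutive checks is an assumed setting, a single successful check is assumed to restore health, and the case where both endpoints are unhealthy is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    name: str
    failure_threshold: int = 3      # consecutive failed checks before "unhealthy"
    consecutive_failures: int = 0

    def record_check(self, ok: bool) -> None:
        """Record one health check result; a success resets the failure count."""
        self.consecutive_failures = 0 if ok else self.consecutive_failures + 1

    @property
    def healthy(self) -> bool:
        return self.consecutive_failures < self.failure_threshold


def resolve(primary: Endpoint, secondary: Endpoint) -> str:
    """Answer with the primary while it is healthy, otherwise with the secondary."""
    return primary.name if primary.healthy else secondary.name
```

The threshold avoids failing over on a single dropped health check, at the cost of a short detection delay. Clients may also keep using the old answer until the record's TTL expires, which is why failover records typically use short TTLs.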

6.3 Scalability and Performance

Route 53 is designed to handle large volumes of DNS queries, making it suitable for high-traffic applications like NodeMasterX. It scales automatically to accommodate traffic spikes, ensuring that DNS queries are resolved quickly and efficiently.

- Global Network: Route 53 operates on a global network of DNS servers, reducing the latency of DNS resolution for users worldwide. This keeps name lookups for NodeMasterX services fast regardless of the user's location.

6.4 Security and Compliance

Security is a key consideration for DNS management, as DNS is a critical component of internet infrastructure:

- DNSSEC: Route 53 supports DNSSEC (Domain Name System Security Extensions), which adds an extra layer of security by ensuring that the DNS responses have not been tampered with. NodeMasterX can enable DNSSEC to protect against certain types of DNS attacks, such as cache poisoning.

- Access Controls: Route 53 integrates with AWS Identity and Access Management (IAM), allowing NodeMasterX to define who can manage DNS settings and access DNS records. This ensures that only authorized users can make changes to critical DNS configurations.

6.5 Cost Efficiency

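Route 53 pricing is based on the number of hosted zones, the number and type of DNS queries (queries answered by policies such as latency-based routing are priced higher than standard queries), and the number of health checks configured. NodeMasterX can optimize these costs by: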

- Efficient Zone Management: By consolidating DNS records and using a minimal number of hosted zones, NodeMasterX can reduce the cost associated with managing DNS records.

- Monitoring Query Volume: Regularly monitoring DNS query volume helps NodeMasterX identify patterns in traffic and optimize DNS configurations to minimize costs.

6.6 Challenges and Considerations

While Route 53 provides a robust DNS management solution, there are challenges to consider:

- Complexity in Routing Policies: Configuring advanced routing policies, such as weighted or latency-based routing, can be complex and requires a thorough understanding of the application's architecture and traffic patterns.

- Managing DNS Failover: While DNS failover is a powerful feature, it requires careful planning and testing to ensure that failover scenarios are handled smoothly without introducing additional latency or downtime.

7. AWS Identity and Access Management (IAM)

7.1 Introduction to AWS IAM

AWS Identity and Access Management (IAM) is a service that helps control access to AWS resources securely. In NodeMasterX, IAM plays a crucial role in managing who can access the AWS resources and what actions they can perform, ensuring that the platform remains secure and compliant with organizational policies.

7.2 Core Functions of AWS IAM in NodeMasterX

7.2.1 User and Group Management

IAM allows NodeMasterX to create and manage AWS users and groups, providing fine-grained control over who can access the AWS environment. Each user can be assigned specific permissions based on their role within the organization.

- User Management: Individual users, such as developers, administrators, or auditors, can be created in IAM, each with their own set of credentials. NodeMasterX can enforce strong password policies and multi-factor authentication (MFA) to enhance security.

- Group Management: Users with similar roles or responsibilities can be grouped together, making it easier to manage their permissions. For example, a group for developers may have access to development environments, while an admin group has access to production resources.

7.2.2 Policy Management

IAM policies define the permissions granted to users, groups, and roles. NodeMasterX can create custom policies that specify exactly what actions are allowed or denied for a particular resource.

- Managed Policies: AWS provides a set of managed policies that cover common use cases, such as granting read-only access to S3 or full access to EC2 instances. AWS managed policies cannot be edited directly, so NodeMasterX can use them as a starting point and copy them into its own customer managed policies when customization is needed.

- Custom Policies: For more granular control, NodeMasterX can create custom policies that specify exact permissions. For example, a custom policy might allow a user to start and stop EC2 instances but not terminate them.
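The start-and-stop example could be expressed as the following policy document. This is a sketch under stated assumptions: the region and account ID are supplied by the caller, and the read-only `ec2:DescribeInstances` statement is an addition so that users can see the instances they manage. Because `ec2:TerminateInstances` is never allowed, it is implicitly denied.

```python
import json


def start_stop_policy(region: str, account_id: str) -> dict:
    """Build an IAM policy that allows starting and stopping EC2 instances only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "StartAndStopInstances",
                "Effect": "Allow",
                "Action": ["ec2:StartInstances", "ec2:StopInstances"],
                "Resource": f"arn:aws:ec2:{region}:{account_id}:instance/*",
            },
            {
                # Describe calls do not support resource-level permissions.
                "Sid": "DescribeInstances",
                "Effect": "Allow",
                "Action": "ec2:DescribeInstances",
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    # 123456789012 is a placeholder account ID.
    print(json.dumps(start_stop_policy("us-east-1", "123456789012"), indent=2))
```

The first statement could be narrowed further, for example by listing specific instance ARNs instead of `instance/*`.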

7.3 Roles and Cross-Account Access

IAM roles allow NodeMasterX to grant permissions to AWS services or users in other AWS accounts without needing to share long-term credentials.

- Service Roles: AWS services, such as Lambda or EC2, can assume IAM roles to access other AWS resources on behalf of NodeMasterX. This ensures that each service has only the permissions it needs to function, following the principle of least privilege.

- Cross-Account Access: NodeMasterX can use IAM roles to grant access to AWS resources in another AWS account. This is useful for scenarios where NodeMasterX collaborates with other organizations or operates multiple AWS accounts.
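Cross-account access rests on a role's trust policy, which names the account allowed to assume the role. The sketch below builds such a document. The MFA condition is an optional hardening step assumed here for illustration, and the account ID in the usage note is a placeholder.

```python
def cross_account_trust_policy(trusted_account_id: str, require_mfa: bool = True) -> dict:
    """Build a trust policy letting principals in another account assume a role."""
    statement = {
        "Effect": "Allow",
        # The account root principal delegates the decision of who may
        # assume the role to the trusted account's own IAM policies.
        "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
        "Action": "sts:AssumeRole",
    }
    if require_mfa:
        # Only sessions that were authenticated with MFA may assume the role.
        statement["Condition"] = {"Bool": {"aws:MultiFactorAuthPresent": "true"}}
    return {"Version": "2012-10-17", "Statement": [statement]}
```

Calling `cross_account_trust_policy("123456789012")` yields a document that can be attached as the role's trust relationship. The permissions the role grants are defined separately in the policies attached to it.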

7.4 Security Best Practices

IAM is central to the security of NodeMasterX's AWS environment. Implementing IAM best practices is crucial for protecting sensitive data and resources:

- Least Privilege: Users and roles should be granted the minimum permissions necessary to perform their tasks. This reduces the risk of accidental or malicious actions that could compromise the platform.

- Multi-Factor Authentication (MFA): Enforcing MFA for all users, especially those with elevated privileges, adds an extra layer of security to the AWS environment.

- Regular Audits: Regularly auditing IAM policies, user activities, and access logs helps ensure that IAM configurations remain secure and compliant with organizational policies.
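Part of a least-privilege audit can be automated by scanning policy documents for wildcard grants. The following is a minimal sketch of such a check. The function names and finding messages are illustrative, and the check covers only `Allow` statements with literal wildcards, so it complements rather than replaces a full review.

```python
def _as_list(value):
    """IAM allows a single string or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def find_broad_statements(policy: dict) -> list[str]:
    """Return a finding for each Allow statement with a wildcard action or resource."""
    findings = []
    for index, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        label = stmt.get("Sid", f"statement[{index}]")
        # Flag "*" and service-wide grants such as "s3:*".
        if any(a == "*" or a.endswith(":*") for a in actions):
            findings.append(f"{label}: wildcard action")
        if "*" in resources:
            findings.append(f"{label}: wildcard resource")
    return findings
```

A wildcard resource is sometimes unavoidable, since some actions do not support resource-level permissions, so the findings are prompts for review rather than automatic violations.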

7.5 Cost Efficiency

IAM itself is a free service, but it plays a key role in managing the security and compliance of AWS resources, which can indirectly impact costs:

- Optimizing Resource Access: By enforcing least privilege and regularly reviewing permissions, NodeMasterX can prevent unauthorized actions that could lead to unnecessary resource consumption or security breaches.

- Automating Access Management: Using IAM roles and policies to automate access management reduces the administrative overhead and helps ensure that resources are used efficiently.

7.6 Challenges and Considerations

While IAM provides powerful tools for managing access to AWS resources, there are challenges to consider:

- Complexity in Policy Management: Creating and managing IAM policies can be complex, especially as the number of users, groups, and roles grows. Careful planning and documentation are necessary to avoid conflicts and ensure that policies are applied correctly.

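- Managing Temporary Credentials: Temporary credentials issued through IAM roles expire automatically, but applications must refresh them before they expire, and session durations need to be chosen carefully. Any remaining long-term access keys still have to be rotated regularly, which can be challenging to track.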

8. Conclusion

This document provides an in-depth overview of the AWS cloud architecture for NodeMasterX, highlighting key components and their roles in ensuring a scalable, secure, and efficient platform. By leveraging AWS services such as EC2, RDS, S3, CloudFront, Route 53, and IAM, NodeMasterX can deliver a robust blockchain API service that meets the needs of developers, businesses, and financial institutions. As the platform continues to evolve, it is essential to regularly review and optimize the architecture to address new challenges and opportunities in the rapidly changing blockchain landscape.
